Andrew Dai Introduced Semi-Supervised Sequence Learning

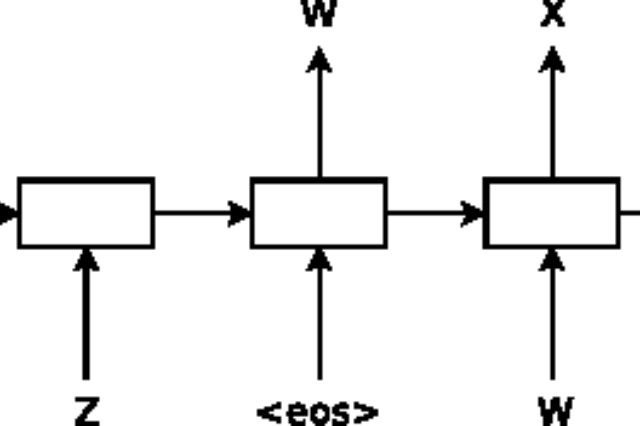

Andrew Dai of Google Brain demonstrated a semi-supervised approach to training sequence models, known as Semi-Supervised Sequence Learning. A model is first trained on unlabeled text (unsupervised learning) and then trained on labeled data (supervised learning). For the unsupervised stage, the work proposed two approaches. The first is to predict the next word in a sequence, which is the conventional language-modeling objective in Natural Language Processing (NLP). The second is a sequence autoencoder, which works somewhat like a proofreader: it reads the input sequence into a single vector and then predicts the input sequence again from that vector. The parameters learned by either approach can then serve as the starting point for a supervised learning model. Experiments suggested that recurrent networks pretrained this way were more stable to train and generalized better.
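The two unsupervised objectives differ mainly in how training targets are built from unlabeled text. The sketch below is a minimal illustration under stated assumptions: it only constructs the (input, target) examples for each objective and does not implement the recurrent networks the paper actually trains. The function names and the `<go>`/`<eos>` markers are hypothetical choices for illustration, not taken from the original work.

```python
from typing import List, Tuple

# Hypothetical start/end markers; illustrative only, not from the paper's code.
GO = "<go>"
EOS = "<eos>"


def language_model_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Objective 1 (language model): predict the next word from the words so far."""
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[:i]  # every word read so far
        target = tokens[i]    # the word the model must predict next
        pairs.append((context, target))
    return pairs


def autoencoder_example(tokens: List[str]) -> Tuple[List[str], List[str], List[str]]:
    """Objective 2 (sequence autoencoder): read the sequence, then predict it again.

    Returns (encoder_input, decoder_input, decoder_target). The encoder would
    summarize encoder_input into one vector; the decoder would start from that
    vector and reproduce the same sequence one word at a time.
    """
    encoder_input = list(tokens)
    decoder_input = [GO] + list(tokens)    # previous word is fed in at each step
    decoder_target = list(tokens) + [EOS]  # the input again, shifted by one position
    return encoder_input, decoder_input, decoder_target


if __name__ == "__main__":
    sentence = "the movie was great".split()
    for context, target in language_model_pairs(sentence):
        print(context, "->", target)
    print(autoencoder_example(sentence))
```

Both functions consume only raw text, which is what makes the pretraining stage unsupervised: no labels are needed until the later supervised step reuses the learned parameters.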

November 2015